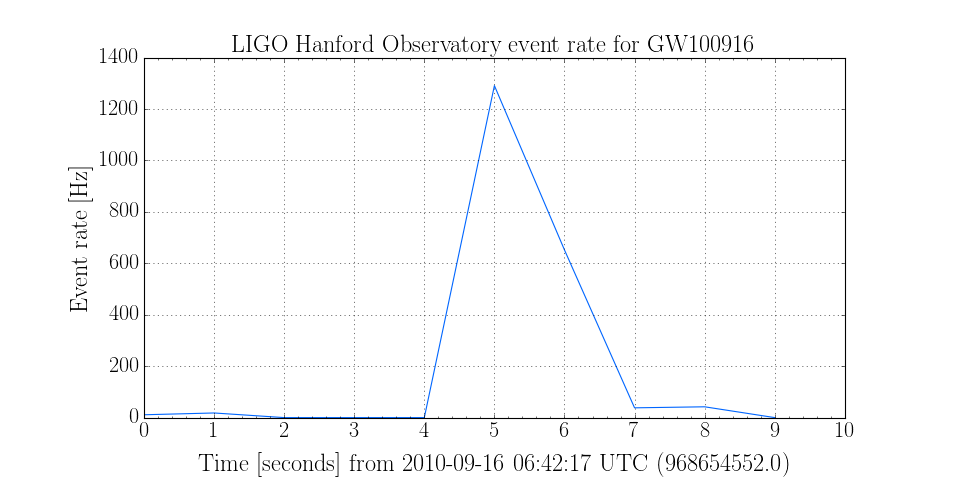

Calculating (and plotting) event rate versus time

I would like to study the rate at which event triggers are generated by the ExcessPower gravitational-wave burst detection algorithm, over a small stretch of data.

The data from which these events were generated are simulated Gaussian noise with the Advanced LIGO design spectrum, so they don’t contain any real gravitational waves, but they will help tune the algorithm to improve detection of future, real signals.

First, import the SnglBurstTable:

from gwpy.table.lsctables import SnglBurstTable

and read a table of (simulated) events:

events = SnglBurstTable.read('../../gwpy/tests/data/'
                             'H1-LDAS_STRAIN-968654552-10.xml.gz')

We can calculate the rate of events (in Hertz) using the event_rate() method, here with a stride of 1 second between the GPS start and end times of the data:

rate = events.event_rate(1, start=968654552, end=968654562)
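
To make the idea of an event rate concrete, here is a minimal sketch of the underlying calculation using only NumPy: count the events falling in consecutive bins of fixed width and divide by the bin width. This is an illustration of the general technique, not GWpy's actual implementation; the function name `binned_event_rate` and the event times below are hypothetical.

```python
import numpy as np


def binned_event_rate(times, stride, start, end):
    """Count events in consecutive bins of width `stride` (seconds)
    between `start` and `end`, and convert the counts to a rate in Hz.

    Hypothetical helper for illustration only; not part of GWpy.
    """
    nbins = int(round((end - start) / stride))
    # nbins + 1 edges define nbins contiguous bins
    edges = start + stride * np.arange(nbins + 1)
    counts, _ = np.histogram(times, bins=edges)
    # events per bin divided by bin width in seconds gives Hz
    return edges[:-1], counts / stride


# made-up event times (GPS seconds), not read from the example file
times = np.array([968654552.1, 968654552.7, 968654553.5, 968654561.9])
bin_starts, rate_hz = binned_event_rate(times, 1, 968654552, 968654562)
```

With a stride of 1 second over a 10-second span this yields ten rate samples, one per second, each labelled by the start time of its bin.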

The event_rate() method has returned a TimeSeries, so we can display this using the plot() method of that object:

plot = rate.plot()
plot.set_xlim(968654552, 968654562)
plot.set_ylabel('Event rate [Hz]')
plot.set_title('LIGO Hanford Observatory event rate for GW100916')
plot.show()
