5. Plotting EventTable rate versus time for specific column bins

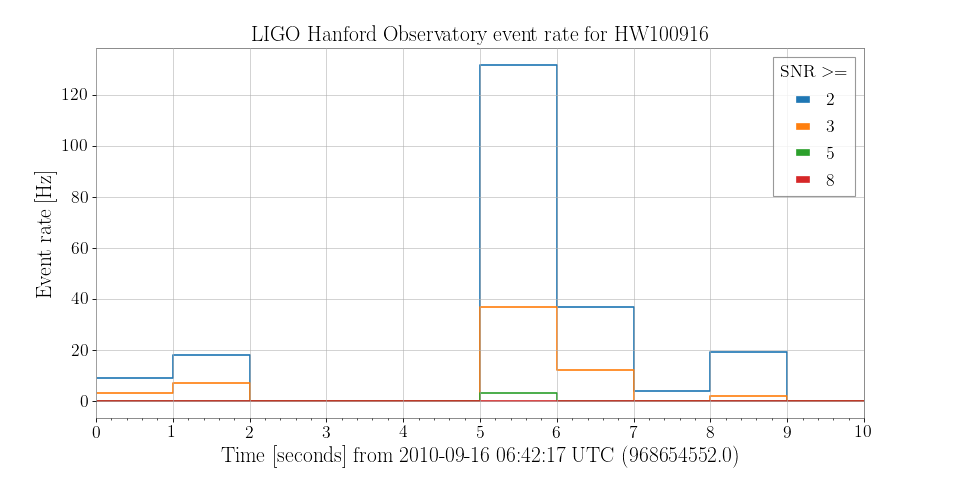

I would like to study the rate at which event triggers are generated by the ExcessPower gravitational-wave burst detection algorithm, over a small stretch of data, binned by various thresholds on signal-to-noise ratio (SNR).

The data from which these events were generated contain a simulated gravitational-wave signal, or hardware injection, used to validate the performance of the LIGO detectors and downstream data analysis procedures.

First, we import the EventTable object and read in a set of events from a LIGO_LW-format XML file containing a sngl_burst table:

    from gwpy.table import EventTable
    events = EventTable.read('H1-LDAS_STRAIN-968654552-10.xml.gz',
                             tablename='sngl_burst', columns=['peak', 'snr'])

Note

Here we manually specify the columns to read so that the read() operation parses only the data we actually need.

Now we can use the binned_event_rates() method to calculate the event rate in a number of bins of SNR:

    rates = events.binned_event_rates(1, 'snr', [2, 3, 5, 8], operator='>=',
                                      start=968654552, end=968654562)

Note

The list [2, 3, 5, 8] and operator >= specify SNR thresholds of 2, 3, 5, and 8.
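To make the thresholding concrete, here is a minimal pure-Python sketch of the idea: count the events that pass each threshold in each fixed-width time bin, then divide by the bin width to get a rate in Hz. This is an illustration only, not GWpy's implementation. The helper name `binned_rates` and the event times and SNRs are made up for the example.

```python
import operator as op


def binned_rates(times, values, thresholds, stride, start, end, compare=op.ge):
    """Return {threshold: [rate in Hz for each stride-long time bin]}.

    Hypothetical helper for illustration; not part of GWpy.
    """
    nbins = int((end - start) // stride)
    stop = start + nbins * stride  # ignore any partial bin at the end
    rates = {}
    for threshold in thresholds:
        counts = [0] * nbins
        for t, v in zip(times, values):
            # keep events inside the time span that pass the threshold
            if start <= t < stop and compare(v, threshold):
                counts[int((t - start) // stride)] += 1
        # convert counts per bin into a rate (events per second)
        rates[threshold] = [count / stride for count in counts]
    return rates


# Made-up event times (seconds) and SNRs, for illustration only
times = [0.2, 0.7, 1.1, 1.5, 1.9, 2.4]
snrs = [2.5, 6.0, 3.1, 9.0, 1.0, 5.0]

rates = binned_rates(times, snrs, [2, 3, 5, 8], stride=1, start=0, end=3)
for threshold, series in rates.items():
    print(threshold, series)
```

Because each threshold is applied independently with >=, the series are cumulative rather than disjoint: the event with SNR 9.0 is counted in all four series, so the rate for a lower threshold is never smaller than the rate for a higher one.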

Finally, we can make a plot:

    plot = rates.step()
    ax = plot.gca()
    ax.set_xlim(968654552, 968654562)
    ax.set_ylabel('Event rate [Hz]')
    ax.set_title('LIGO Hanford Observatory event rate for HW100916')
    ax.legend(title='SNR $>=$')
    plot.show()
